The Task

One of our solutions has a multitude of tasks that can run in the background (e.g. workflows, reports, data imports). To check the status of their tasks, users had to open a small list containing every task they had run, and while waiting for a task to complete they had to click refresh to update the list. Each refresh put unnecessary load on our database. Our client asked whether we could instead display toast notifications on whatever page the user is on when their task finishes, and whether the same mechanism could later be used to pop up any informative message.

The Problem

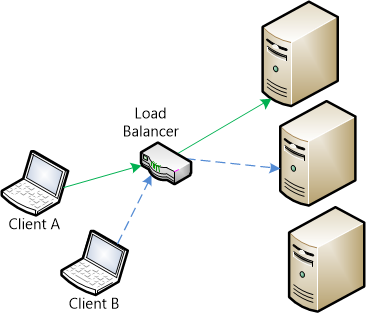

This particular application of ours is hosted on Azure and written in .NET, so the obvious choice for us was to introduce SignalR. SignalR is a real-time communication library made for tasks like this; it uses WebSockets where available and falls back to other transports otherwise. Every client connects to a hub on the server that runs SignalR and holds the connection open, so information can flow quickly between the two parties. This works perfectly well on its own in an environment with a single server endpoint that all clients talk to.

However, on Azure we have multiple web role instances, any of which can respond to a given request. The client therefore connects to a load balancer, not to a specific server, and the server that has to push a notification might not be the one holding the client's connection. Without a live connection to the client, that server's SignalR instance cannot find the client it needs to notify. The situation gets even messier with more clients, where the actions of one client should trigger a notification on another client that is connected to a different server.

So the problem can be boiled down to one short question. How can we distribute the messages between our web role servers?

The Solution using SignalR

To overcome this problem, one can use a component called a backplane, which forwards messages between the servers. There are three out-of-the-box backplane providers available for SignalR:

- SQL Server.

- Azure Service Bus.

- Redis Cache.
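Conceptually, a backplane fans every message out to all servers, so that whichever one holds the client's connection can deliver it. The following is a minimal in-memory sketch of that idea, written in JavaScript for brevity. The `Backplane` and `Server` classes are hypothetical stand-ins for illustration only; they are not SignalR's API, and a real backplane such as Redis does the fan-out over the network.

```javascript
// Hypothetical in-memory stand-in for a backplane (e.g. Redis pub/sub).
class Backplane {
  constructor() {
    this.subscribers = [];
  }
  subscribe(handler) {
    this.subscribers.push(handler);
  }
  publish(message) {
    // Fan the message out to every subscribed server.
    for (const handler of this.subscribers) handler(message);
  }
}

// Hypothetical web role instance: it only knows the clients connected to it.
class Server {
  constructor(name, backplane = null) {
    this.name = name;
    this.backplane = backplane;
    this.connections = new Map(); // userId -> callback standing in for an open connection
    if (backplane) backplane.subscribe((message) => this.deliverLocally(message));
  }
  connect(userId, onMessage) {
    this.connections.set(userId, onMessage);
  }
  deliverLocally({ userId, text }) {
    const onMessage = this.connections.get(userId);
    if (!onMessage) return false; // this server holds no connection for that user
    onMessage(text);
    return true;
  }
  notify(userId, text) {
    const message = { userId, text };
    if (this.backplane) {
      this.backplane.publish(message); // every server gets a chance to deliver
    } else {
      this.deliverLocally(message); // only reaches clients on this server
    }
  }
}

// Without a backplane: the client is on server A, but server B finishes the task.
const lostInbox = [];
const serverA = new Server("A");
const serverB = new Server("B");
serverA.connect("alice", (text) => lostInbox.push(text));
serverB.notify("alice", "report finished"); // never reaches alice

// With a backplane: the same situation, but the message is forwarded.
const deliveredInbox = [];
const bus = new Backplane();
const serverC = new Server("C", bus);
const serverD = new Server("D", bus);
serverC.connect("alice", (text) => deliveredInbox.push(text));
serverD.notify("alice", "report finished"); // delivered by server C
```

In the first scenario the notification is silently dropped because server B has no connection for the user; in the second, server C receives it through the shared bus and delivers it.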

The recommended service for Azure projects is Redis Cache. Redis is an in-memory (and thus very fast) key-value store. It was originally built for Linux, which meant that anyone who wanted to use it with Azure had to create a Linux virtual machine or server and set up Redis on it. It can still be done this way, but there is a much more convenient setup for it now.

On the new Azure portal, click on Create > Data + Storage > Redis Cache, then specify a DNS name, pricing tier, and other settings, then click Create. After the cache has been created, browse to its blade on the portal, and check the settings. There are a number of options including SSL, ports, and diagnostics to configure. The other key tab on the blade is the Keys tab itself. Be sure to generate the primary and secondary keys before you start using the service.

Let’s get to the code now. First, add a new connection string to the configuration file, such as this:

<add name="RedisCache" connectionString="{the custom DNS name}.redis.cache.windows.net,ssl=true,password={primary or secondary key}" />

Then install the Microsoft.AspNet.SignalR.Redis NuGet package into the web role project. Add the following lines to the OwinConfig class (or whichever class configures SignalR in the project):

    public void Configuration(IAppBuilder app)
    {
        // ...

        // get the connection string
        var redisConnection = ConfigurationManager.ConnectionStrings["RedisCache"].ConnectionString;

        // set up SignalR to use Redis, and specify an event key that will be used to identify the application in the cache
        GlobalHost.DependencyResolver.UseRedis(new RedisScaleoutConfiguration(redisConnection, "MyEventKey"));

        // ... map SignalR/hubs, e.g. app.Map("/signalr", map => map.RunSignalR(new HubConfiguration()));
    }

And that is all it takes to wire Redis up to SignalR in Azure.

The Remarks

We are running this project under several different cloud service environments (separate ones for production, user testing, QA, etc.). Even though an instance of Redis Cache is pretty cheap at the moment, our test environments would make very little use of it, so we decided to go with a single instance shared by all of them. Because of this, and because we use the same user ids in the databases of every environment, the following scenario can happen: a user working in the test environment gets a notification from a report they triggered in the production environment. This occurred because both test and production were set up to use the same cache. When a worker role in production pushed a message, it was distributed to all our cloud services, including test.

We overcame this problem by simply including the current environment's name in the server hub calls. On the client side, a JavaScript function checks that value against its own variable containing the environment name. If they match, we show the toast notification; otherwise, we do nothing. This way we retain the ability to send notifications to every user in every environment of our subscription if we ever need to.
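The client-side check could look roughly like the sketch below. All names here (`currentEnvironment`, `shouldShowNotification`, `handleNotification`, and the shape of the notification object) are assumptions made for illustration; the actual hub method and toast library used in the project are not shown in this post.

```javascript
// Hypothetical: the name of the environment this page was served from.
// In practice the server would render this value into the page.
const currentEnvironment = "test";

// Decide whether a pushed notification belongs to this environment.
function shouldShowNotification(notification, environment) {
  return notification.environment === environment;
}

// Hypothetical handler that the hub's client callback would invoke.
// `showToast` is passed in so the sketch does not depend on a toast library.
function handleNotification(notification, environment, showToast) {
  if (!shouldShowNotification(notification, environment)) {
    return false; // pushed from another environment: do nothing
  }
  showToast(notification.message);
  return true;
}

// Usage: one message from production, one from test, both arriving on a test page.
const shownToasts = [];
const showToast = (message) => shownToasts.push(message);

handleNotification({ environment: "production", message: "Report ready" }, currentEnvironment, showToast);
handleNotification({ environment: "test", message: "Import finished" }, currentEnvironment, showToast);
```

Only the second message ends up in `shownToasts`, because its environment name matches the page's own.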

Get Coding!

Greenfinch Technology